Summary and Implementation of Particle Swarm Optimization

Particle swarm optimization (PSO) was first proposed by James Kennedy and Russell Eberhart in 1995, also known as particle swarm algorithm.

The basic idea of the particle swarm algorithm comes from the study of the foraging behavior of bird flocks. Each particle can be regarded as a bird, and the process of searching for a solution is similar to the process of a flock of birds looking for food. In the process of searching for food, each bird knows the best location it has found. At the same time, information is shared within the flock, so that each bird also knows the best location the entire flock has found.

The advantage of PSO is that it is simple, easy to implement, has fewer parameters that need to be adjusted, and has better global optimization capabilities.

Basic Concept

The position of the -th particle:

The velocity of the -th particle:

Each particle has three properties: speed, position and fitness. The velocity represents the distance and direction of movement of the particles, and the position is the solution to the corresponding practical problem. The above formula gives the position and velocity of the particle including D-dimensional components. For example, for the function , the position of a particle can be expressed as At this time, the speed and position of the particle are both 2-dimensional. Fitness is calculated by the fitness function and is used to evaluate the quality of a particle.

The particle swarm algorithm first needs to initialize a group of particles, randomly initialize the position and speed of each particle, and then search for the global optimal solution through continuous iteration. In each iteration, the particle updates its speed and position by tracking two "extreme values" (individual extreme value , group extreme value .

Velocity Update

The relevant variables in the formula have the following meanings:

: The -dimensional velocity component of particle at the -th iteration

: The -dimensional position component of particle at the -th iteration

: Inertia factor ()

, : Learning factor}

, : Random numbers in the interval to increase the randomness of the search

: The -dimensional position component of the individual extreme value of particle after the first iterations}

: The -dimensional position component of the population extreme value of the entire particle population after the first iterations

Inertia term: reflects the influence of the particle’s last velocity.

Self-cognition items: the particle’s own experience, the distance and direction between the particle’s current position and its historical optimal position.

Group cognition items: information sharing among all particles, the distance and direction between the current position of the particle and the historical optimal position in the population.

For each dimensional component of each particle's velocity, it needs to be updated according to this formula. It can be seen from this formula: Next movement direction of the particle Inertial movement direction Optimal movement direction recognized by itself Optimal movement direction recognized by the group.

Position Update

For each dimensional component of each particle's position, it needs to be updated according to this formula. The position of each particle is the solution to the actual problem, so the position update process of the particles is the search process for the solution. Every time the particle updates its position, it needs to calculate the fitness and update the individual extreme value and the group extreme value .

Parameter Settings

Particle Swarm Size

When the population size is small, the algorithm easily falls into local optimality, while when the population size is large, the search efficiency can be higher, but the amount of calculation in each iteration will also increase. Usually when the population size increases to a certain level, the effect of increasing the population size will be limited.

Inertia Factor

Also called inertia weight, the larger the value, the stronger the particle's ability to explore new areas. A better approach is to let the algorithm conduct more random explorations in the early stages of the search, while in the later stages it is more likely to use existing experience. This idea is similar to the balance between exploration and exploitation in Reinforcement Learning (RL).

The relevant variables in the formula have the following meanings:

: Maximum inertia weight

: Minimum inertia weight

: Current number of iterations

: Maximum number of iterations

Common adjustment strategies include linearly decreasing inertia weight: as the number of iterations increases, the inertia weight will continue to decrease, similar to the above formula.

Maximum Speed of Particles

If the speed of a particle exceeds the set maximum speed , the speed of the particle is set to . When is large, the particles fly fast and the algorithm has strong search ability, but it is easy to fly past the optimal solution. When is small, it is easy to fall into the local optimal solution.

Example

Objective Function

Next, we try to use PSO to find the maximum value of this function:

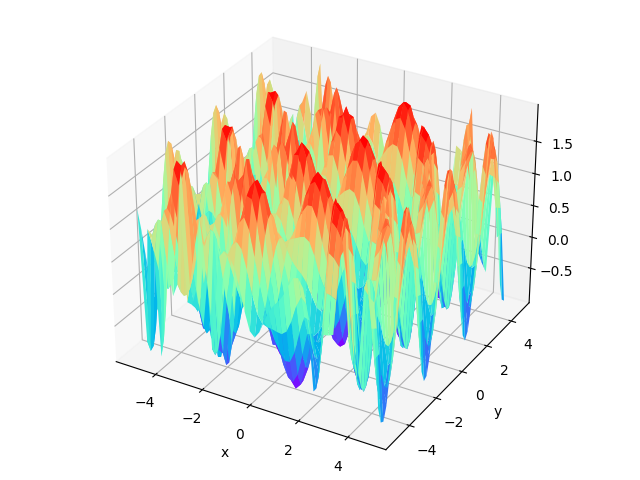

The graph of the objective function is as follows:

Result

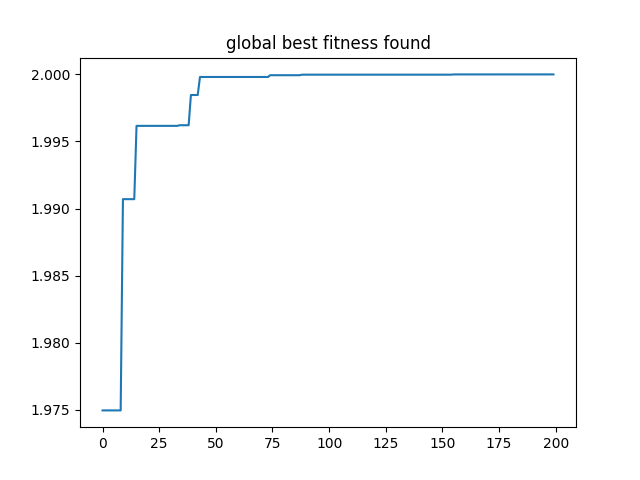

In the iterative process of the PSO algorithm, the fitness change process of the optimal solution found is as follows:

The optimal solution found by PSO is as follows:

1 | The optimal (maximum) solution found: [1.02996889 0.54137418] |

Code

The Python code of the PSO is here: Optimization/metaheuristic_algorithms/Particle_Swarm_Optimization/PSO.py (github.com)